1. FAIR Evaluator tool

1.1. Ingredients

| Ingredient | Type | Comment |
|---|---|---|
| | data communication protocol | |
| | policy | |
| | redirection service | |
| | identifier minting service; identifier resolution service | |
| | identifier minting service; identifier resolution service | |
| | identifier minting service | based on Handle system |
| | identifier resolution service | |
| | identifier resolution service | |
| | identifier resolution service | |
| | FAIR assessment | |
| | FAIR assessment | |
| | model | |

| Actions.Objectives.Tasks | Input | Output |
|---|---|---|

1.2. Objectives

Perform an automatic assessment of a dataset against the FAIR principles [1] expressed as nanopublications, using the FAIREvaluator [2].

Obtain human- and machine-readable reports highlighting strengths and weaknesses with respect to FAIR.

1.3. Step-by-Step Process

1.3.1. Loading the FAIREvaluator web application

Navigate to the FAIREvaluator tool, which can be accessed via the following two addresses:

Fig. 1.3 The FAIREvaluator home page

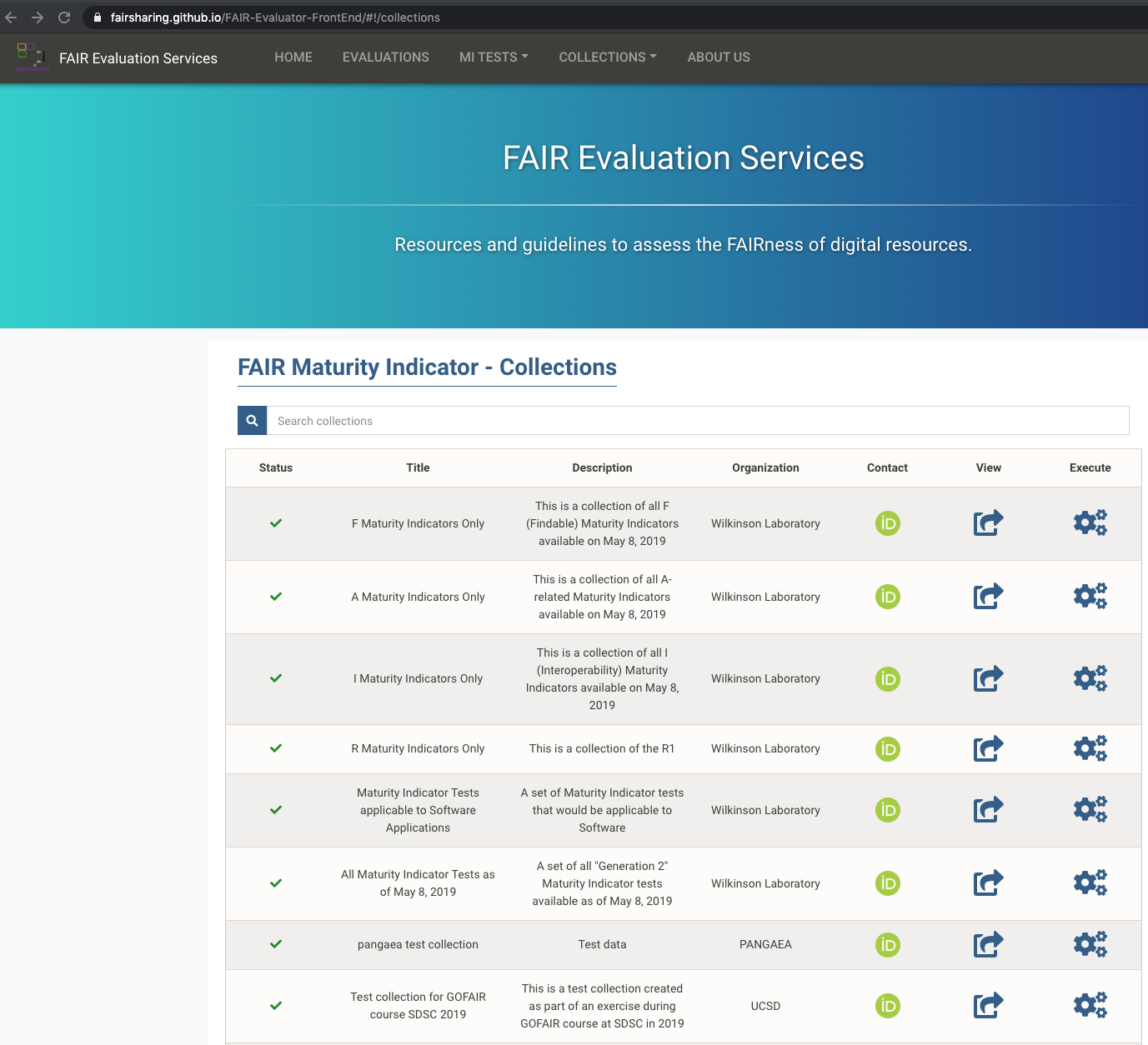

1.3.2. Understanding the FAIR indicators

In order to run the FAIREvaluator, it is important to understand the notion of FAIR indicators (formerly referred to as FAIR metrics).

One may browse the list of current, community-defined indicators from the Collections page.

Fig. 1.4 Select a ‘FAIR Maturity Indicator - Collections’

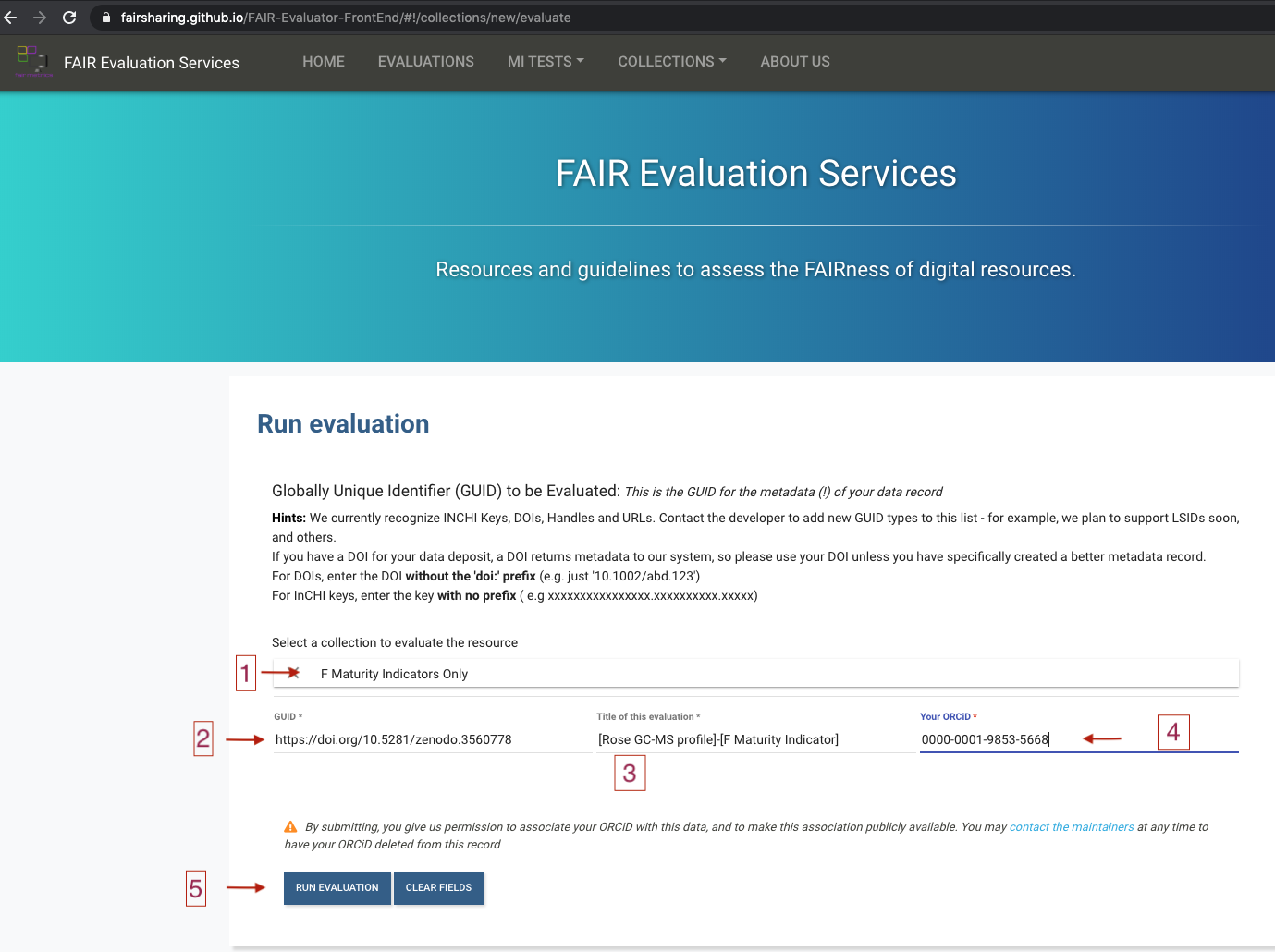

1.3.3. Preparing the input information

To run an evaluation, the FAIREvaluator needs the following four inputs from users:

- A collection of FAIR indicators, selected from the list described above.
- A globally unique, persistent, resolvable identifier for the resource to be evaluated.
- A title for the evaluation. Because evaluations are saved, adopt a naming convention to make future searches easier.
- A person identifier in the form of an ORCID.

Fig. 1.5 Running the FAIREvaluator - part 1: setting the input
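The four inputs can be checked locally before they are submitted. The following is a minimal, hypothetical Python sketch: the `EvaluationInput` class and the `validate` and `orcid_checksum_ok` functions are names invented here and are not part of the FAIREvaluator. Only the ORCID check follows a published rule, namely the ISO 7064 MOD 11-2 checksum that ORCID uses for the last character of an identifier.

```python
import re
from dataclasses import dataclass

# Hypothetical container for the four inputs; field names are illustrative only.
@dataclass
class EvaluationInput:
    collection: str      # the chosen collection of FAIR indicators
    resource_guid: str   # identifier of the resource to evaluate
    title: str           # evaluation title, ideally following a naming convention
    orcid: str           # e.g. "0000-0002-1825-0097"

# Four blocks of four characters; the last character may be a digit or "X".
ORCID_PATTERN = re.compile(r"^\d{4}-\d{4}-\d{4}-\d{3}[\dX]$")

def orcid_checksum_ok(orcid: str) -> bool:
    """Check the ORCID check character using ISO 7064 MOD 11-2."""
    if not ORCID_PATTERN.match(orcid):
        return False
    digits = orcid.replace("-", "")
    total = 0
    for ch in digits[:-1]:  # all characters except the check character
        total = (total + int(ch)) * 2
    result = (12 - total % 11) % 11
    expected = "X" if result == 10 else str(result)
    return digits[-1] == expected

def validate(inp: EvaluationInput) -> list[str]:
    """Return a list of human-readable problems (empty if the input looks usable)."""
    problems = []
    if not inp.collection.strip():
        problems.append("no indicator collection selected")
    if not inp.resource_guid.strip():
        problems.append("missing resource identifier")
    if not inp.title.strip():
        problems.append("missing evaluation title")
    if not orcid_checksum_ok(inp.orcid):
        problems.append("ORCID is malformed or fails its checksum")
    return problems
```

A typo in the ORCID is the kind of mistake this catches early, since a single wrong digit changes the expected check character.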

1.3.4. Running the FAIREvaluator

Click the ‘Run Evaluation’ button on the https://fairsharing.github.io/FAIR-Evaluator-FrontEnd/#!/collections/new/evaluate page.

Fig. 1.6 Running the FAIREvaluator - part 2: execution

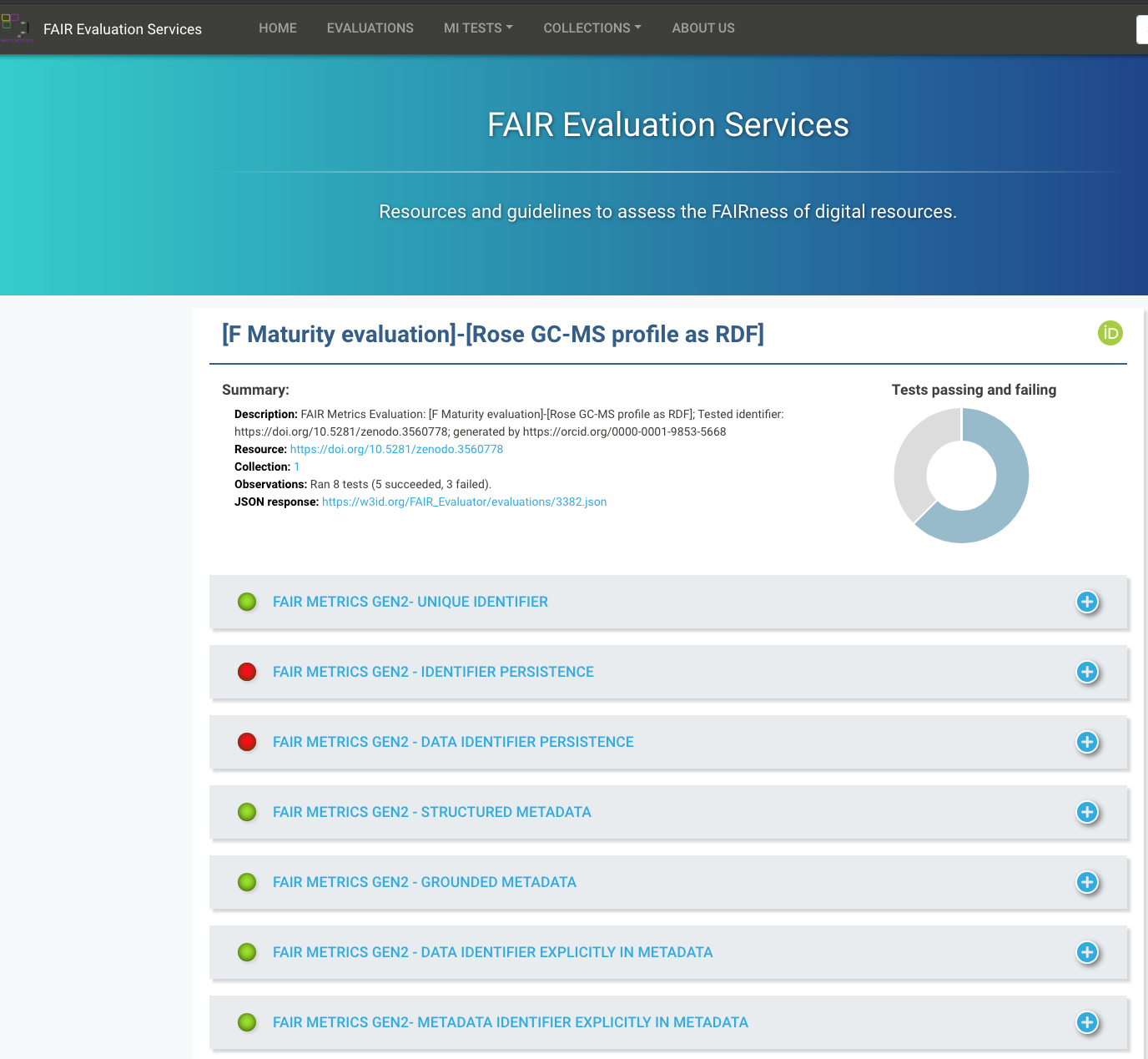
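The same evaluation can be thought of as a single HTTP request carrying the inputs from the previous step. The following hedged Python sketch only *builds* such a request with the standard library and never sends it. The endpoint path `/collections/<id>/evaluate`, the JSON keys `resource`, `executor` and `title`, and the base URL used in any call are assumptions for illustration; the FAIREvaluator API documentation is the authority on the actual interface.

```python
import json
import urllib.request

def build_evaluation_request(evaluator_base: str, collection_id: str,
                             resource: str, orcid: str,
                             title: str) -> urllib.request.Request:
    """Prepare, but do not send, a POST request asking an evaluator to run a collection.

    ASSUMPTION: the URL path and JSON field names below are illustrative and
    have not been checked against the live FAIREvaluator service.
    """
    url = f"{evaluator_base.rstrip('/')}/collections/{collection_id}/evaluate"
    body = json.dumps({"resource": resource, "executor": orcid, "title": title})
    return urllib.request.Request(
        url,
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json", "Accept": "application/json"},
        method="POST",
    )
```

Sending it would be a matter of calling `urllib.request.urlopen(req)`, which needs network access and a verified endpoint; keeping construction separate from sending makes the payload easy to inspect first.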

1.3.5. Analysing the FAIREvaluator report

Following execution of the FAIREvaluator, a detailed report is generated.

Fig. 1.7 FAIREvaluator report - overall report

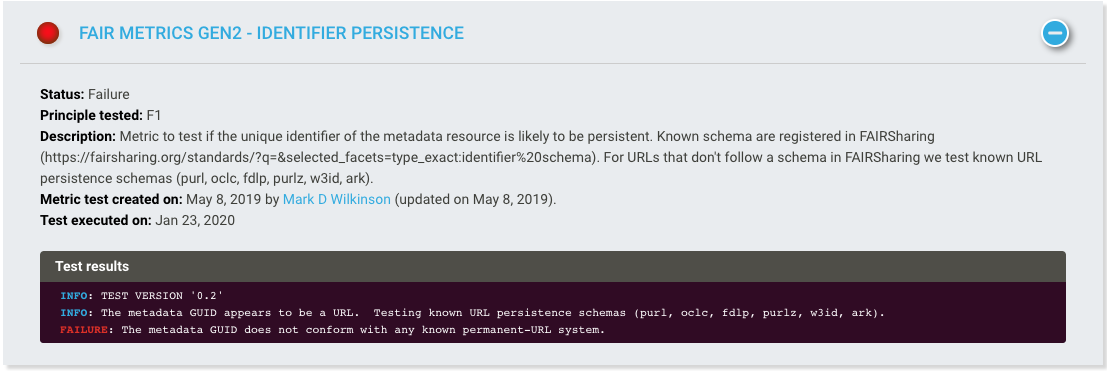
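Before reading individual failures, it can help to tally results per FAIR letter. This minimal Python sketch assumes a simplified, hypothetical report shape (a list of dictionaries with `indicator`, `principle` and `passed` keys); the actual FAIREvaluator report format is not reproduced here, so the step that extracts these fields would need adapting.

```python
from collections import defaultdict

def summarise(results):
    """Return ({letter: (passed, total)}, [failing indicator names]).

    ASSUMPTION: each result is a dict with hypothetical keys
    "indicator", "principle" (e.g. "F1", "A1.1") and "passed" (bool).
    """
    counts = defaultdict(lambda: [0, 0])  # FAIR letter -> [passed, total]
    failures = []
    for r in results:
        letter = r["principle"][0].upper()  # first character: F, A, I or R
        counts[letter][1] += 1
        if r["passed"]:
            counts[letter][0] += 1
        else:
            failures.append(r["indicator"])
    summary = {letter: tuple(pair) for letter, pair in counts.items()}
    return summary, failures
```

The list of failing indicators is then the natural starting point for the detailed inspection that follows.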

Time to dig into the details and work out why some indicators report a failure:

Fig. 1.8 Apparently a problem with identifier persistence when using DOIs, which are URNs rather than URLs sensu stricto
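Where a failure stems from the form in which a DOI was supplied, as Fig. 1.8 suggests, one option is to pass the DOI in its resolvable HTTPS form through the doi.org proxy. A minimal Python sketch, assuming the input may arrive as a bare DOI, a `doi:` string or an older `dx.doi.org` URL; the function name `doi_to_url` is hypothetical, and whether this changes any indicator outcome has not been verified here.

```python
import re

# Loose DOI shape: "10." + registrant code of 4-9 digits + "/" + suffix.
DOI_PATTERN = re.compile(r"^10\.\d{4,9}/\S+$")

# Prefixes stripped before validation (compared case-insensitively).
KNOWN_PREFIXES = (
    "https://doi.org/", "http://doi.org/",
    "https://dx.doi.org/", "http://dx.doi.org/",
    "doi:",
)

def doi_to_url(identifier: str) -> str:
    """Normalise a DOI-like string to an https://doi.org/ URL, or raise ValueError."""
    s = identifier.strip()
    lowered = s.lower()
    for prefix in KNOWN_PREFIXES:
        if lowered.startswith(prefix):
            s = s[len(prefix):]
            break
    if not DOI_PATTERN.match(s):
        raise ValueError(f"not a DOI: {identifier!r}")
    return "https://doi.org/" + s
```

For example, `doi_to_url("doi:10.1000/182")` yields `https://doi.org/10.1000/182`, which could then be entered as the resource identifier.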

1.4. Conclusion

Using software tools to assess FAIR maturity is essential to ensure that processes and capabilities actually deliver, and that claims can be checked. Furthermore, only automation can cope with the scale and volume of assets to evaluate. Software-based evaluations are repeatable, reproducible and free of bias (other than any bias arising from the definitions of the FAIR indicators themselves). They are also more demanding in terms of technical implementation and knowledge. Services such as the FAIREvaluator are essential for gauging improvements in data management services and for helping developers build FAIR services and data.

1.5. References

- [1] M. D. Wilkinson, M. Dumontier, I. J. Aalbersberg, G. Appleton, M. Axton, A. Baak, N. Blomberg, J. W. Boiten, L. B. da Silva Santos, P. E. Bourne, J. Bouwman, A. J. Brookes, T. Clark, M. Crosas, I. Dillo, O. Dumon, S. Edmunds, C. T. Evelo, R. Finkers, A. Gonzalez-Beltran, A. J. Gray, P. Groth, C. Goble, J. S. Grethe, J. Heringa, P. A. ‘t Hoen, R. Hooft, T. Kuhn, R. Kok, J. Kok, S. J. Lusher, M. E. Martone, A. Mons, A. L. Packer, B. Persson, P. Rocca-Serra, M. Roos, R. van Schaik, S. A. Sansone, E. Schultes, T. Sengstag, T. Slater, G. Strawn, M. A. Swertz, M. Thompson, J. van der Lei, E. van Mulligen, J. Velterop, A. Waagmeester, P. Wittenburg, K. Wolstencroft, J. Zhao, and B. Mons. The FAIR Guiding Principles for scientific data management and stewardship. Sci Data, 3:160018, Mar 2016.
- [2] M. D. Wilkinson, M. Dumontier, S. A. Sansone, L. O. Bonino da Silva Santos, M. Prieto, D. Batista, P. McQuilton, T. Kuhn, P. Rocca-Serra, M. Crosas, and E. Schultes. Evaluating FAIR maturity through a scalable, automated, community-governed framework. Sci Data, 6(1):174, Sep 2019.

1.6. Authors

| Name | ORCID | Affiliation | Type | ELIXIR Node | Contribution |
|---|---|---|---|---|---|
| | | University of Oxford | | | Writing - Original Draft |
| | | University of Oxford | | | Writing - Review & Editing |